Building a Product Service API with Claude 3.7 Sonnet 🤖

Artificial intelligence is shaking up the tech world faster than ever, with tons of SaaS tools now letting anyone build apps using just plain language. These tools make it super easy for non-coders to quickly bring their ideas to life. But while they’re awesome for rapid prototyping, they sometimes miss the mark on creating scalable, maintainable, production-ready systems.

There’s a lot of talk about AI taking over IT jobs — especially programming — but instead of replacing us, AI can be a great sidekick. It handles the routine stuff, freeing up engineers to focus on creative problem-solving and high-level design.

In this blog post, I’m teaming up with AI to build a complete product service API from scratch. Using the Cline plugin for VS Code with Anthropic’s Claude 3.7 Sonnet model, accessed through AWS Bedrock, I’ll guide the model to follow top-notch cloud and software engineering practices. The project comes with solid Jest unit tests and thorough Postman end-to-end tests to ensure it’s truly enterprise-grade.

I let AI generate every part of the project while I steer the process and make sure everything meets high standards. Join me as we explore how combining human know-how with AI-driven automation can reshape the future of software development. Plus, I’ll wrap things up by comparing the total costs with what it would take for a single developer to build the same project manually.

What is VSCode?

Visual Studio Code is a free, open-source code editor developed by Microsoft that offers a lightweight yet powerful environment for coding, debugging, and version control. With extensive extension support, it’s customisable to suit various programming languages and workflows, making it a popular choice for developers on Windows, macOS, and Linux.

What is the Cline Plugin?

The Cline plugin is a Visual Studio Code extension that leverages AI to automate project generation and coding tasks. It works with model providers such as AWS Bedrock and models such as Anthropic’s Claude 3.7 Sonnet, enabling developers to quickly scaffold projects, generate code, and implement best practices, all through natural language prompts.

What is Claude 3.7 Sonnet?

Claude 3.7 Sonnet is an advanced language model developed by Anthropic, renowned for its exceptional ability to generate high-quality, production-ready code. Optimised for clean, efficient outputs that adhere to industry best practices, it’s widely regarded as one of the top LLMs available for code generation today.

VSCode Setup ⚙️

The first step is to configure the Cline plugin in VS Code to use the AWS Bedrock API, which will send prompts to the Anthropic Claude 3.7 Sonnet language model. For this, we provide the AWS account credentials, set the region to us-east-1, enable cross-region inference, and set the model to anthropic.claude-3-7-sonnet-20250219-v1:0.
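Cross-region inference on Bedrock works through inference profiles, whose IDs prefix the base model ID with a geography such as `us.` for US Regions. Ticking the cross-region option in Cline should take care of this for you; the sketch below only illustrates the naming convention. The `us`/`eu`/`apac` mapping is a simplified assumption and not an exhaustive list of Bedrock geographies, and `inferenceProfileId` is a hypothetical helper.

```typescript
// Simplified, non-exhaustive mapping from Region prefix to inference-profile geography.
const GEO_PREFIXES: ReadonlyArray<[string, string]> = [
  ["us-", "us"],
  ["eu-", "eu"],
  ["ap-", "apac"],
];

// Derives a cross-region inference profile ID from a Region and a base model ID.
function inferenceProfileId(region: string, baseModelId: string): string {
  const match = GEO_PREFIXES.find(([regionPrefix]) => region.startsWith(regionPrefix));
  if (!match) {
    throw new Error(`No cross-region inference geography assumed for ${region}`);
  }
  return `${match[1]}.${baseModelId}`;
}

const MODEL_ID = "anthropic.claude-3-7-sonnet-20250219-v1:0";
console.log(inferenceProfileId("us-east-1", MODEL_ID));
// prints "us.anthropic.claude-3-7-sonnet-20250219-v1:0"
```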

We also set the following custom instructions for the model, ensuring it generates code based on industry and cloud best practices and my personal preferences.

All code should be generated with the following instructions:

1. Clean code with ports and adapters.

2. Code through interfaces.

3. SOLID principles.

4. AWS well architected best practices.

5. Microsoft common data model.

6. Should follow AWS CDK best practices when writing CDK code.

7. Any DynamoDB tables should be implemented with single table design.

8. Lambda functions should be written in Node.js using the NodeJS L2 CDK construct.
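Instructions 1 to 3 are fairly abstract, so here is a minimal sketch of what "ports and adapters" and "code through interfaces" can look like in TypeScript. The names (`Product`, `ProductRepository`, `InMemoryProductRepository`, `ProductService`) are hypothetical and not taken from the generated repository; a production adapter would wrap the DynamoDB client rather than a `Map`.

```typescript
// Hypothetical domain model used only for this sketch.
interface Product {
  id: string;
  name: string;
  price: number;
}

// Port: the core depends on this interface, never on a concrete database.
interface ProductRepository {
  create(product: Product): Promise<Product>;
  get(id: string): Promise<Product | undefined>;
  update(product: Product): Promise<Product>;
  delete(id: string): Promise<boolean>;
}

// Adapter: an in-memory implementation, handy for unit tests.
// A DynamoDB adapter would implement the same interface.
class InMemoryProductRepository implements ProductRepository {
  private readonly items = new Map<string, Product>();

  async create(product: Product): Promise<Product> {
    if (this.items.has(product.id)) {
      throw new Error(`Product ${product.id} already exists`);
    }
    this.items.set(product.id, product);
    return product;
  }

  async get(id: string): Promise<Product | undefined> {
    return this.items.get(id);
  }

  async update(product: Product): Promise<Product> {
    if (!this.items.has(product.id)) {
      throw new Error(`Product ${product.id} not found`);
    }
    this.items.set(product.id, product);
    return product;
  }

  async delete(id: string): Promise<boolean> {
    return this.items.delete(id);
  }
}

// Application service: receives the port through its constructor (dependency
// inversion), so swapping adapters requires no change here.
class ProductService {
  constructor(private readonly repository: ProductRepository) {}

  async rename(id: string, name: string): Promise<Product> {
    const existing = await this.repository.get(id);
    if (!existing) {
      throw new Error(`Product ${id} not found`);
    }
    return this.repository.update({ ...existing, name });
  }
}
```

Because `ProductService` only sees the `ProductRepository` port, unit tests can inject the in-memory adapter while the deployed Lambda injects the DynamoDB one.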

Initial Prompt 📝

Now that the Cline plugin is configured, it’s time to leverage Claude 3.7 Sonnet for project generation. I begin in Plan mode with the following prompt.

“Generate a Product API CDK project. The API should provide all CRUD operations, should implement the Microsoft common data model for products, include strict validation, include unit tests using jest, should use Postman and newman for end to end tests.”
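To make "strict validation" concrete, here is a hand-rolled sketch. The fields are a hypothetical, heavily simplified subset loosely inspired by a product entity; they are assumptions, not the actual Microsoft Common Data Model schema or the code Claude generated. "Strict" here means unknown fields are rejected and every rule is checked, so the client gets all errors at once.

```typescript
// Hypothetical, simplified input shape; a real CDM-based model has many more attributes.
interface ProductInput {
  name: string;
  productNumber: string;
  description?: string;
  price: number;
}

const ALLOWED_FIELDS = new Set(["name", "productNumber", "description", "price"]);

// Returns every validation error found; an empty array means the input is valid.
function validateProductInput(input: unknown): string[] {
  if (typeof input !== "object" || input === null || Array.isArray(input)) {
    return ["body must be a JSON object"];
  }
  const record = input as Record<string, unknown>;
  const { name, productNumber, description, price } = record;
  const errors: string[] = [];

  // Reject unknown fields instead of silently ignoring them.
  for (const key of Object.keys(record)) {
    if (!ALLOWED_FIELDS.has(key)) errors.push(`unknown field: ${key}`);
  }
  if (typeof name !== "string" || name.trim() === "") {
    errors.push("name is required");
  }
  if (typeof productNumber !== "string" || productNumber.trim() === "") {
    errors.push("productNumber is required");
  }
  if (description !== undefined && typeof description !== "string") {
    errors.push("description must be a string");
  }
  if (typeof price !== "number" || !Number.isFinite(price) || price < 0) {
    errors.push("price must be a non-negative number");
  }
  return errors;
}

// Type guard so a handler can narrow the parsed request body after validation.
function isProductInput(input: unknown): input is ProductInput {
  return validateProductInput(input).length === 0;
}
```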

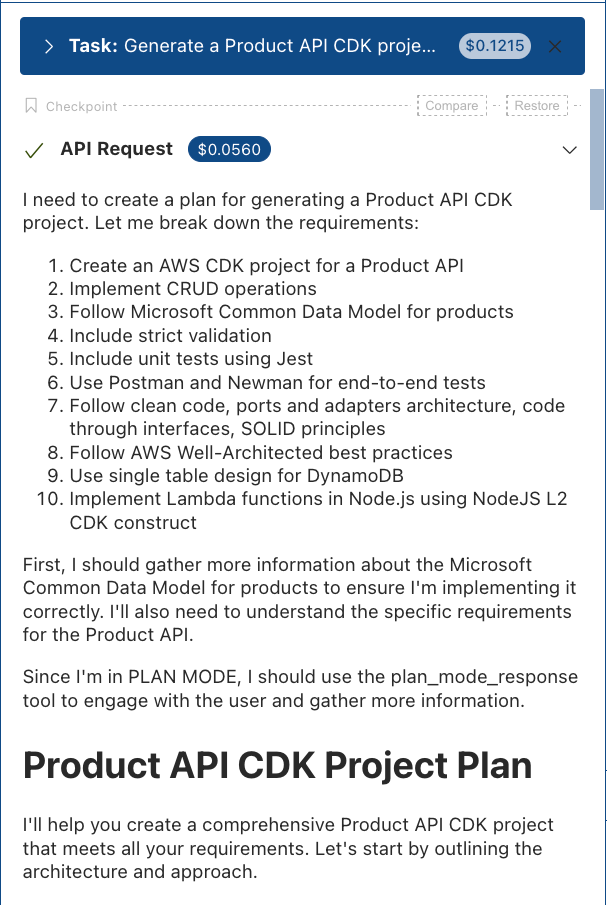

Cline sends the request to the Claude LLM, which starts planning the project and produces the following plan. I initiate the project in Plan mode to review how Claude intends to generate it, allowing me to assess the approach before fully committing and incurring costs.

The image above shows that the language model generates a comprehensive plan based on my initial instructions. The final section of the plan includes several clarifying questions aimed at refining the project’s quality. In response, I provided additional guidance to incorporate OAuth with Amazon Cognito for user authentication, and to implement CloudWatch alarms and metrics for the API and Lambda functions, all in accordance with AWS best practices and recommendations. The additional instructions were as follows:

“Use Amazon Cognito to provide OAuth2 authentication and authorisation. Ensure CloudWatch alarms are created for API Gateway and AWS Lambda following AWS best practices and recommended alarms.”
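With Cognito in place, API clients send a JWT issued by the user pool, and API Gateway’s Cognito authorizer validates it before any Lambda runs. To illustrate the kind of claim checks involved for an ID token (`iss`, `aud`, `token_use`, `exp`), here is a simplified sketch with hypothetical function names. It deliberately skips signature verification against the user pool’s JSON Web Key Set, which any real validation must perform.

```typescript
// Claims this sketch inspects on a Cognito ID token.
interface IdTokenClaims {
  iss: string;
  aud: string;
  token_use: string;
  exp: number; // seconds since the Unix epoch
}

// Decodes the payload segment of a JWT (base64url-encoded JSON, ASCII assumed).
// WARNING: this does NOT verify the signature; never trust a token on this alone.
function decodeJwtPayload(token: string): unknown {
  const parts = token.split(".");
  if (parts.length !== 3) throw new Error("malformed JWT");
  const base64 = parts[1].replace(/-/g, "+").replace(/_/g, "/");
  const padded = base64 + "=".repeat((4 - (base64.length % 4)) % 4);
  return JSON.parse(atob(padded));
}

// Returns a list of claim problems; an empty list means the claims look valid.
function checkIdTokenClaims(
  token: string,
  region: string,
  userPoolId: string,
  clientId: string,
  nowSeconds: number = Math.floor(Date.now() / 1000),
): string[] {
  const claims = decodeJwtPayload(token) as Partial<IdTokenClaims>;
  const problems: string[] = [];
  const expectedIssuer = `https://cognito-idp.${region}.amazonaws.com/${userPoolId}`;
  if (claims.iss !== expectedIssuer) problems.push("unexpected issuer");
  if (claims.aud !== clientId) problems.push("unexpected audience");
  if (claims.token_use !== "id") problems.push("not an ID token");
  if (typeof claims.exp !== "number" || claims.exp <= nowSeconds) problems.push("token expired");
  return problems;
}
```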

Project Plan Update 📋

With the additional instruction, Claude provides an updated project plan.

Claude delivers an even more refined plan for the project, complete with an architectural flow diagram and a comprehensive implementation strategy. With this blueprint in hand, the next step is to put the plan into action!

Acting on the plan 🎭

With the plan in place, I switched the Cline task to Act mode. This enabled the Claude model to execute the strategy and generate all project files according to the detailed implementation plan.

The Claude output during project generation was as follows:

With auto-approve enabled, the project was generated remarkably fast — taking only a few minutes, up to five at most. Claude created a complete Product API service that adheres to code and CDK best practices, producing a modular and readable codebase based on clean code principles and a ports-and-adapters architecture. Following my custom instructions, it also generated well-crafted unit tests and Postman tests using Newman, and implemented a CloudWatch dashboard, along with API and Lambda metrics and alarms aligned with AWS recommendations. The next step was to try to deploy the service to an AWS test account and manually test the service.

The Product Service API project and all code generated by Claude 3.7 Sonnet can be found in the GitHub Product Service repository.

Initial Deployment 🧨

While inspecting the codebase, I noticed an error in one of the files where the uuid module was imported — it appeared to be the familiar issue of a missing type definition for the uuid package. This was likely due to the Cline terminal integration not working, meaning Cline couldn’t capture the results of any package installations during the act phase. I resolved this by installing the missing package.

The next issue I encountered was the absence of the cdk.json file. During project creation, Claude attempted to use the AWS CDK CLI to generate the project; however, because Cline lacked terminal access, it defaulted to manually creating all project files. I addressed this by manually adding the cdk.json file.
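The CDK CLI reads `cdk.json` to learn how to run the app, and the essential key is `app`. As a sketch, assuming the CDK entry point lives at `bin/product-service.ts` (a hypothetical path; check the repository’s `bin` folder) and that `ts-node` is installed, a minimal file could be produced like this. Files created by `cdk init` usually also carry `watch` and `context` settings, which are omitted here.

```typescript
// Hypothetical entry point; adjust to the project's actual bin/ file.
const ENTRY_POINT = "bin/product-service.ts";

// Builds the JSON text for a minimal cdk.json. The "app" command tells the
// CDK CLI how to execute the TypeScript app before synthesising it.
function minimalCdkJson(entryPoint: string): string {
  const config = {
    app: `npx ts-node --prefer-ts-exts ${entryPoint}`,
  };
  return JSON.stringify(config, null, 2);
}

console.log(minimalCdkJson(ENTRY_POINT));
```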

After reviewing all the files generated by Claude, I proceeded to deploy the service using the CDK CLI. This is when I encountered another issue: the CDK code for the Lambda error alarms was failing. The alarm name was built from a fixed alarm name plus the lambda.functionName property. During synthesis, lambda.functionName is a token (a placeholder whose value is only resolved at deployment) rather than a literal string, which caused the error. I fixed this by altering the Lambda reference array in the API construct so that it holds an object for each Lambda containing both the Lambda’s name and the function itself, then updated the alarm creation logic to use that name instead.
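The fix can be sketched without pulling in the CDK itself. In the snippet below, `FunctionLike`, `NamedLambda`, `looksLikeToken` and `buildErrorAlarmSpecs` are hypothetical stand-ins: `functionName` approximately mimics the unresolved token a real Lambda construct exposes at synthesis time, and the alarm identifiers are built only from the plain `name` string stored alongside each function. In real CDK code, `Token.isUnresolved()` from `aws-cdk-lib` is the proper way to detect a token.

```typescript
// Stand-in for a CDK Lambda function: at synthesis time functionName is an
// unresolved token placeholder, not the final deployed name.
interface FunctionLike {
  functionName: string;
}

// Each function travels with a plain name that is known at synthesis time.
interface NamedLambda {
  name: string;
  fn: FunctionLike;
}

interface AlarmSpec {
  id: string; // used for the alarm construct ID/name, so it must be a literal string
  target: FunctionLike;
}

// Crude token detection for this sketch only.
function looksLikeToken(value: string): boolean {
  return value.includes("${Token[");
}

function buildErrorAlarmSpecs(lambdas: NamedLambda[]): AlarmSpec[] {
  return lambdas.map(({ name, fn }) => {
    if (looksLikeToken(name)) {
      throw new Error(`Alarm name "${name}" would contain an unresolved token`);
    }
    return { id: `${name}-ErrorsAlarm`, target: fn };
  });
}
```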

Once these issues were resolved, I successfully deployed the service to my AWS test account. My next step was to create a test user in Amazon Cognito and develop a local login script to generate an ID token for a manual Postman request to test the API. However, when I sent a create Product request, it resulted in an internal server error.

Reviewing the CloudWatch logs revealed that the Lambda runtime couldn’t find the handler function. Upon examining the API construct generated by Claude, I discovered that despite my custom instructions to create Lambda functions using the CDK L2 Node.js Lambda construct (NodejsFunction), Claude had instead used the base CDK L2 Lambda construct (lambda.Function). To resolve this, I instructed Claude to modify the code to use the NodejsFunction construct, which bundles the TypeScript code with esbuild.
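The "handler not found" error makes sense once you recall how the Node.js runtime reads the handler setting: `path/to/file.exportName`, split on the last dot, where the module must exist as JavaScript in the deployment package. The hypothetical helper below (not part of any AWS library) performs that split; the `.js`/`.mjs`/`.cjs` candidate list is an assumption about which extensions the runtime accepts. If the package contains only `.ts` sources, no candidate exists, which is the failure mode bundling with `NodejsFunction` avoids.

```typescript
interface ResolvedHandler {
  modulePath: string; // path relative to the deployment package root
  exportName: string; // exported function name
  candidateFiles: string[]; // files the runtime could load (assumed extensions)
}

// Splits a handler string such as "src/handlers/create.handler" on its LAST dot.
function resolveHandler(handler: string): ResolvedHandler {
  const lastDot = handler.lastIndexOf(".");
  if (lastDot <= 0 || lastDot === handler.length - 1) {
    throw new Error(`Handler "${handler}" must look like "file.export"`);
  }
  const modulePath = handler.slice(0, lastDot);
  const exportName = handler.slice(lastDot + 1);
  return {
    modulePath,
    exportName,
    candidateFiles: [".js", ".mjs", ".cjs"].map((ext) => modulePath + ext),
  };
}

// A package built from raw TypeScript sources, without a compile or bundle step.
const packageFiles = new Set(["src/handlers/create.ts"]);
const resolved = resolveHandler("src/handlers/create.handler");
const found = resolved.candidateFiles.some((file) => packageFiles.has(file));
console.log(found); // prints false: only the .ts source was packaged
```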

After deploying the change, I sent another create Product request to the API, only to encounter a new error. A review of the CloudWatch logs revealed that the aws-sdk module was missing. The issue stemmed from Claude using version 2 of the AWS SDK, which was excluded from the packaged Lambda code and is unavailable in the Lambda runtime environment (the Node.js 18 and later runtimes include only version 3). To fix this, I updated the task to replace AWS SDK version 2 with version 3.

With the AWS SDK updated, I redeployed the service to my AWS test account and successfully sent a create Product request to the API 🚀. After reviewing the resources, log outputs, and database entry, everything was functioning as expected. I further validated the API by executing all CRUD operations — and every request was successful! 🚀

CloudWatch Dashboard & Alarms 🚨

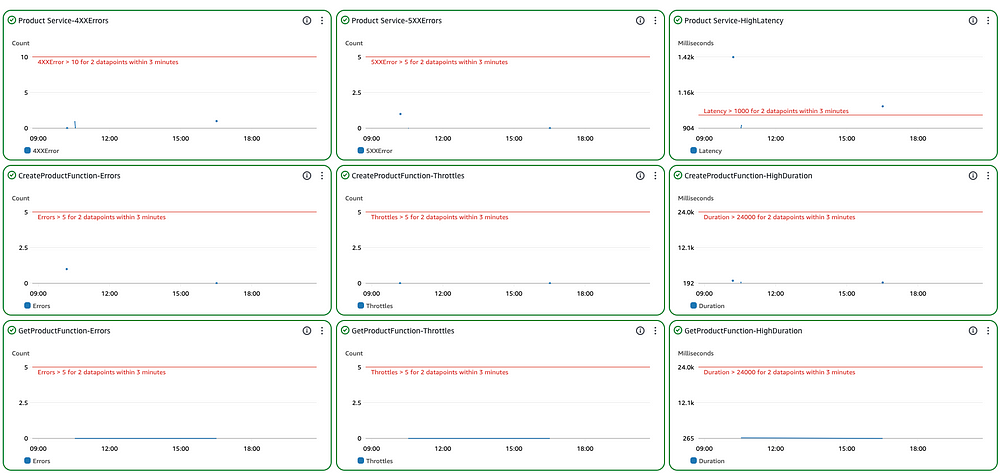

Observability is an essential component of project development that many teams overlook or simply run out of time to implement due to tight deadlines. When I tasked Claude with generating a complete API, I also challenged it to deliver a CloudWatch dashboard — complete with metrics and alarms for the API and Lambda services — to achieve a truly production-ready solution. After successfully deploying and testing all API endpoints, I examined the dashboard to confirm that the recommended metrics and alarms were in place.

While I was pleased with the overall output, I noticed that the dashboard was missing alarm widgets. Although the alarms existed in CloudWatch, they weren’t centrally displayed alongside the other metrics. I then added another prompt to the task, instructing it to incorporate alarm widgets into the dashboard.

“Add the CloudWatch alarms to the CloudWatch dashboard as alarm widgets.”
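In the CDK, alarm widgets are typically added with `AlarmStatusWidget` from `aws-cdk-lib/aws-cloudwatch`. To show what ends up in the dashboard without depending on the CDK, here is a sketch that builds the dashboard body JSON directly, assuming the CloudWatch dashboard body format in which an alarm widget has `"type": "alarm"` and lists alarm ARNs under `properties.alarms`. The helper name, ARNs, titles and layout values are made up.

```typescript
interface AlarmWidget {
  type: "alarm";
  x: number;
  y: number;
  width: number;
  height: number;
  properties: { title: string; alarms: string[] };
}

// One full-width alarm-status widget per group, stacked vertically
// (dashboards use a 24-unit-wide grid).
function buildAlarmWidgets(groups: Record<string, string[]>, height = 4): AlarmWidget[] {
  return Object.keys(groups).map((title, index): AlarmWidget => ({
    type: "alarm",
    x: 0,
    y: index * height,
    width: 24,
    height,
    properties: { title, alarms: groups[title] },
  }));
}

// Placeholder ARNs for illustration only.
const body = JSON.stringify({
  widgets: buildAlarmWidgets({
    "API Gateway alarms": ["arn:aws:cloudwatch:us-east-1:123456789012:alarm:Api5xxErrors"],
    "Lambda alarms": ["arn:aws:cloudwatch:us-east-1:123456789012:alarm:CreateProductErrors"],
  }),
});
console.log(body);
```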

With the alarm widgets now visible on the dashboard, I still felt something was missing: the metrics for the DynamoDB Product database were not represented, and no alarms had been implemented for DynamoDB. I added another instruction to the task to incorporate these elements into the dashboard.

“Following AWS recommended guidelines, add metrics to the dashboard for the product DynamoDB table. Also create CloudWatch alarms for the Product DynamoDB table based on AWS best practice and recommendations. Add the DynamoDB product database alarms to the CloudWatch dashboard as alarm widgets.”

With the Product Service API successfully deployed, it’s time to run the tests generated by the Claude language model.

Running Unit Tests 🧪

Claude generated all the unit tests and included the standard npm test task in the package.json file. The initial run of the Jest unit tests resulted in one failing test suite.

Rather than manually investigating and fixing the problem with the tests, I decided to ask Claude to fix them for me with the following prompt.

“I have run the unit tests and I am getting test failures in the dynamodb-product-repository.ts file. The error is as follows:

FAIL test/unit/adapters/dynamodb-product-repository.test.ts
● Test suite failed to run

lib/adapters/dynamodb-product-repository.ts:168:64 - error TS2345: Argument of type '{ TableName: string; Key: { PK: string; SK: string; }; UpdateExpression: string; ExpressionAttributeValues: Record<string, any>; ExpressionAttributeNames: Record<string, string>; ReturnValues: string; }' is not assignable to parameter of type 'UpdateCommandInput'.
Type '{ TableName: string; Key: { PK: string; SK: string; }; UpdateExpression: string; ExpressionAttributeValues: Record<string, any>; ExpressionAttributeNames: Record<string, string>; ReturnValues: string; }' is not assignable to type 'Omit<UpdateItemCommandInput, "Key" | "Expected" | "ExpressionAttributeValues" | "AttributeUpdates">'.
Types of property 'ReturnValues' are incompatible.
Type 'string' is not assignable to type 'ReturnValue | undefined'.

168 const result = await this.docClient.send(new UpdateCommand(params));

lib/adapters/dynamodb-product-repository.ts:187:64 - error TS2345: Argument of type '{ TableName: string; Key: { PK: string; SK: string; }; ReturnValues: string; }' is not assignable to parameter of type 'DeleteCommandInput'.
Type '{ TableName: string; Key: { PK: string; SK: string; }; ReturnValues: string; }' is not assignable to type 'Omit<DeleteItemCommandInput, "Key" | "Expected" | "ExpressionAttributeValues">'.
Types of property 'ReturnValues' are incompatible.
Type 'string' is not assignable to type 'ReturnValue | undefined'.

187 const result = await this.docClient.send(new DeleteCommand(params));”

Claude fixed the errors by making modifications to the dynamodb-product-repository-test.ts file. It’s worth noting that this task took longer to run and incurred higher API call costs because Cline couldn’t inspect the terminal output to confirm if the fixes were effective.
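The compiler errors above come from TypeScript literal widening: a `ReturnValues: "ALL_NEW"` value inside an object without a type annotation is inferred as plain `string`, while the SDK expects the `ReturnValue` union. One common fix is to give the params object an explicit type. The dependency-free sketch below mirrors that with a locally declared union of the five DynamoDB `ReturnValues` options and a hypothetical helper for a single-table update; the `PRODUCT#<id>` / `METADATA` key scheme is an assumption, and real code would annotate with `UpdateCommandInput` from `@aws-sdk/lib-dynamodb`.

```typescript
// Local mirror of DynamoDB's ReturnValue options (the SDK exports an equivalent type).
type ReturnValue = "NONE" | "ALL_OLD" | "UPDATED_OLD" | "ALL_NEW" | "UPDATED_NEW";

interface UpdateParams {
  TableName: string;
  Key: { PK: string; SK: string };
  UpdateExpression: string;
  ExpressionAttributeNames: Record<string, string>;
  ExpressionAttributeValues: Record<string, unknown>;
  ReturnValues: ReturnValue; // the union type, not plain string
}

// Builds an update for the hypothetical single-table item PK=PRODUCT#<id>, SK=METADATA.
// Attribute names are aliased (#f0, #f1, ...) to avoid reserved words such as "name".
function buildProductUpdate(
  tableName: string,
  productId: string,
  changes: Record<string, unknown>,
): UpdateParams {
  const fields = Object.keys(changes);
  if (fields.length === 0) throw new Error("nothing to update");

  const names: Record<string, string> = {};
  const values: Record<string, unknown> = {};
  const assignments = fields.map((field, i) => {
    names[`#f${i}`] = field;
    values[`:v${i}`] = changes[field];
    return `#f${i} = :v${i}`;
  });

  return {
    TableName: tableName,
    Key: { PK: `PRODUCT#${productId}`, SK: "METADATA" },
    UpdateExpression: `SET ${assignments.join(", ")}`,
    ExpressionAttributeNames: names,
    ExpressionAttributeValues: values,
    ReturnValues: "ALL_NEW", // keeps its literal type thanks to the declared return type
  };
}
```

With the annotation in place (or `as const` on the literal), passing the object to `new UpdateCommand(params)` type-checks; the `DeleteCommand` error has the same cause and the same fix.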

Another issue with the unit tests was branch coverage. It came in at about 52%, so the coverage check failed against the 80% threshold. I again asked Claude to improve branch coverage with the following prompt.

“The global test coverage is not meeting the 80% threshold. Update the unit tests to provide over 80% test coverage. The report is as follows:

PASS test/unit/validation/product-validation.test.ts (6.564 s)

| File | % Stmts | % Branch | % Funcs | % Lines | Uncovered Line #s |
| --- | --- | --- | --- | --- | --- |
| All files | 81.98 | 51.78 | 96.42 | 81.76 | |
| adapters | 73.75 | 52.63 | 90 | 73.41 | |
| …ository.ts | 73.75 | 52.63 | 90 | 73.41 | …63,267-273,278 |
| core/models | 100 | 100 | 100 | 100 | |
| product.ts | 100 | 100 | 100 | 100 | |
| handlers | 86.88 | 40 | 100 | 86.88 | |
| …andlers.ts | 86.88 | 40 | 100 | 86.88 | …07,235-236,263 |
| validation | 100 | 100 | 100 | 100 | |
| …idation.ts | 100 | 100 | 100 | 100 | |

Jest: "global" coverage threshold for branches (80%) not met: 51.78%”

Claude quickly increased test branch coverage, pushing it beyond the 80% mark.
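For reference, an 80% gate like this comes from Jest’s `coverageThreshold` option. Here is a sketch of a `jest.config.ts` with a global threshold, assuming `ts-jest` as the TypeScript transformer and the `test/` folder layout seen in the report; the generated project’s actual configuration may differ.

```typescript
// jest.config.ts (sketch). When coverage is collected, Jest fails the run if
// any global metric falls below its threshold, as happened with branches.
const config = {
  preset: "ts-jest",
  testEnvironment: "node",
  roots: ["<rootDir>/test"],
  collectCoverage: true,
  coverageThreshold: {
    global: {
      branches: 80,
      functions: 80,
      lines: 80,
      statements: 80,
    },
  },
};

export default config;
```

Branch coverage often trails statement coverage because every `if`/`else` side, ternary, and short-circuit counts separately, so error paths need their own tests.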

Postman End to End Tests 🔍

The next phase of evaluation focused on the Postman end-to-end tests executed via Newman. Claude had already incorporated a Postman tests task into the package.json file for this purpose. Based on my previous experience with Jest unit tests, I anticipated errors during the initial run. To my surprise, all Postman tests passed on the first attempt. I only needed to populate a few values in the Postman environment file — specifically, the Product API URL and the test user’s ID Token — making the setup and execution remarkably straightforward.
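Filling in the Product API URL and the test user’s ID token by hand works, but the step can also be scripted. The sketch below builds an environment file in the `name` / `values: [{ key, value, enabled }]` shape Postman uses for exported environments; the variable keys `baseUrl` and `idToken` are hypothetical and must match whatever the generated collection references, and the values are placeholders.

```typescript
interface PostmanVariable {
  key: string;
  value: string;
  enabled: boolean;
}

interface PostmanEnvironment {
  name: string;
  values: PostmanVariable[];
}

// Builds a Postman/Newman environment from a plain key/value map.
function buildEnvironment(name: string, vars: Record<string, string>): PostmanEnvironment {
  return {
    name,
    values: Object.keys(vars).map((key) => ({ key, value: vars[key], enabled: true })),
  };
}

const env = buildEnvironment("product-api-test", {
  baseUrl: "https://example.execute-api.us-east-1.amazonaws.com/prod", // placeholder URL
  idToken: "<id-token-from-login-script>", // placeholder token
});
console.log(JSON.stringify(env, null, 2));
```

The resulting JSON could be written to disk and passed to Newman with its `-e` (`--environment`) option.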

Final Project Evaluation 🤔

At this point, the Product Service API is production-ready in its core functionality. Additional optimisations, such as API caching, Lambda fine-tuning, granular authorisation, and email notifications for CloudWatch alarms, would make it stronger still. The generated project is scalable, modular, extensible, maintainable, and aligned with industry standards and cloud best practices, and it provides a robust foundation for a service that manages an organisation’s Product Master data.

In a real-world setting with a seasoned development team, we would approach this project iteratively — developing each resource and endpoint incrementally over a series of sprints. However, the purpose of this blog post is to assess whether Cline and Claude can generate an entire production-ready project, and to evaluate how long it would take and how much it would cost.

Improvements 🔧

The code generated by Claude 3.7 Sonnet adheres to my specifications, delivering a modular, clean, and SOLID codebase that is extensible, maintainable, and self-documented, with robust error handling. However, if I were to restart the project from scratch, I would refine my instructions as follows:

- Instruct Claude to integrate Middy for middleware support across handlers.

- Specify the implementation of more granular logging, including potential log sampling.

- Direct the use of Powertools for AWS Lambda for enhanced metrics, logging, and tracing, integrated through Middy middleware.
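Middy wraps a handler in `before` / `after` / `onError` middleware. The sketch below is a hand-rolled, dependency-free illustration of that pattern, not Middy’s actual API, plus a hypothetical logging middleware that emits debug lines for only a sampled fraction of invocations (the idea behind log sampling). The `random` parameter is injectable only so the sampling can be tested deterministically.

```typescript
type AsyncHandler<E, R> = (event: E) => Promise<R>;

// Hand-rolled middleware shape for this sketch (inspired by, not identical to, Middy).
interface Middleware<E, R> {
  before?: (event: E) => void | Promise<void>;
  after?: (event: E, response: R) => void | Promise<void>;
  onError?: (event: E, error: unknown) => void | Promise<void>;
}

// "before" hooks run in order, then the handler, then "after" hooks in reverse
// order (the onion model). On failure, "onError" hooks run in reverse order and
// the error is rethrown so the caller still sees it.
function withMiddleware<E, R>(
  handler: AsyncHandler<E, R>,
  middlewares: Middleware<E, R>[],
): AsyncHandler<E, R> {
  const reversed = [...middlewares].reverse();
  return async (event: E): Promise<R> => {
    try {
      for (const m of middlewares) await m.before?.(event);
      const response = await handler(event);
      for (const m of reversed) await m.after?.(event, response);
      return response;
    } catch (error) {
      for (const m of reversed) await m.onError?.(event, error);
      throw error;
    }
  };
}

// Hypothetical logger: errors are always logged, debug lines only when sampled.
// The flag lives per middleware instance, which suits Lambda because an
// execution environment handles one invocation at a time.
function sampledLogger<E, R>(
  sampleRate: number,
  log: (line: string) => void,
  random: () => number = Math.random,
): Middleware<E, R> {
  let sampled = false;
  return {
    before: () => {
      sampled = random() < sampleRate;
      if (sampled) log("debug: request received");
    },
    after: () => {
      if (sampled) log("debug: request completed");
    },
    onError: (_event, error) => {
      log(`error: ${String(error)}`);
    },
  };
}
```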

Cost Analysis 💰

We’ve reviewed the process of generating the complete Product Service API project, but what about the cost? How long did it take for “me” (or Claude) to create it? The screenshot below displays the overall cost, along with a detailed breakdown of input and output tokens and context window usage.

As you can see, the project used 9.7M input tokens and 67.9k output tokens, used 108.9k of the 200k context window, and cost $30.2262 overall! That’s the total cost of API calls to AWS Bedrock using the Claude 3.7 Sonnet large language model.
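As a rough cross-check, assuming on-demand list prices for Claude 3.7 Sonnet of $3 per million input tokens and $15 per million output tokens (check current Bedrock pricing for your Region), the rounded token counts land close to the reported figure; the small gap could come from rounding and any cache-related charges. Input tokens make up about 97% of the estimate, most likely because an agent like Cline resends the growing conversation context with each request.

```typescript
// Assumed on-demand prices in USD per million tokens.
const INPUT_PRICE_PER_MILLION = 3;
const OUTPUT_PRICE_PER_MILLION = 15;

function estimateCostUsd(inputTokens: number, outputTokens: number): number {
  return (
    (inputTokens / 1_000_000) * INPUT_PRICE_PER_MILLION +
    (outputTokens / 1_000_000) * OUTPUT_PRICE_PER_MILLION
  );
}

// Rounded token counts from the Cline summary.
const estimate = estimateCostUsd(9_700_000, 67_900);
console.log(estimate.toFixed(2)); // prints "30.12", against the reported $30.2262
```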

Another key metric is the time it took to generate and finalise the project. Remarkably, even on my first attempt using Cline and Claude to create a complete project, the entire process was completed in under two hours!

How does this compare to a scenario where a project is built entirely without AI? Let’s consider the case of a single developer creating the project from scratch. Although developer productivity varies, I’ll use my experience and some average figures to illustrate the difference.

Assume it takes one experienced developer five days to complete the entire project — setting up the project, configuring tools, provisioning infrastructure with the CDK, creating a CloudWatch dashboard with alarms, developing Lambda code for all CRUD endpoints, writing unit tests, and assembling end-to-end Postman tests. In a realistic workday of 8 hours, a developer might only spend about 5 hours actively coding.

Now, consider the cost of that developer. Using the UK average annual salary for a cloud software engineer — let’s take £60,000 as a conservative estimate — and assuming there are 252 working days in a year (8 hours each, totalling 2,016 hours), the hourly rate comes out to roughly £29.76. For a five-day project (40 hours total), the developer’s cost would be around:

£29.76/hour × 40 hours ≈ £1,190.40

Now, contrast this with using AI tools. With Cline and Claude, the same project is generated in just two hours. At £29.76 per hour, two hours cost about £59.52. Adding the cost of AWS Bedrock API calls — approximately £23.62 — brings the total to roughly £83.14.
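The same arithmetic as a small script, using the figures above: a £60,000 salary over 2,016 working hours, rounded to £29.76 per hour as in the text, and the Bedrock bill of $30.2262 taken as £23.62 (which implies roughly £0.78 per US dollar). Changing the constants makes it easy to try other salary or duration assumptions.

```typescript
const ANNUAL_SALARY_GBP = 60_000;
const WORKING_HOURS_PER_YEAR = 252 * 8; // 2,016 hours
const BEDROCK_COST_GBP = 23.62;

// Hourly rate rounded to pence, matching the £29.76 used in the text.
const hourlyRate = Math.round((ANNUAL_SALARY_GBP / WORKING_HOURS_PER_YEAR) * 100) / 100;

const manualCost = hourlyRate * 5 * 8; // five 8-hour days
const aiCost = hourlyRate * 2 + BEDROCK_COST_GBP; // two hours plus Bedrock charges

console.log(hourlyRate.toFixed(2)); // prints "29.76"
console.log(manualCost.toFixed(2)); // prints "1190.40"
console.log(aiCost.toFixed(2)); // prints "83.14"
console.log(`${((aiCost / manualCost) * 100).toFixed(1)}% of the manual cost`); // prints "7.0% of the manual cost"
```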

These rough calculations highlight the significant potential cost and time savings achieved by leveraging AI tools to augment software engineering tasks.

Final Thoughts 🧐

Using Cline and Claude 3.7 Sonnet to generate a complete Product Service API project has been interesting. The project includes unit tests, end-to-end tests, and a CloudWatch dashboard complete with metrics and alarms for all services. The detail and length of this blog post reflect that the process wasn’t without challenges: I encountered minor issues with the Cline plugin’s terminal integration and some quirks in the code and tests generated by Claude.

Other than manually writing a JavaScript login script, setting up a directory for Cline output, installing a missing package, adding the cdk.json file, and fixing a naming issue in the CDK Lambda alarm resource, every piece of code and fix came directly from Claude 3.7 Sonnet.

While the cost analysis might suggest that AI can accomplish engineering tasks at a fraction of the expense of human developers, it really highlights the power of augmenting our work with AI tools.

Over the past year, AI has made significant strides. Despite the hype from venture capitalists, YouTube influencers, unicorn company executives, and AI research labs declaring the end of manual software engineering as AI models break coding benchmarks, the true potential of AI in this field will only be unlocked through the creativity, ingenuity, and insight of human engineers.

I’m immensely impressed by Claude 3.7 Sonnet’s output and look forward to using this model — and others like it — to enhance my daily workflow, boost productivity, improve quality, and accelerate the delivery of valuable solutions.

Thanks for reading! 🤙